RIO: Flexible Real-time Robot I/O for Cross-Embodiment Robot Learning

Authors:

Pablo Ortega-Kral*,1, Eliot Xing*,1, Arthur Fender Coelho Bucker1, Vernon Luk1, Jason Kim2, Owen Kwon1, Angchen Xie1, Nikhil Sobanbabu1, Yifu Yuan1, Megan Lee1, Deepam Ameria1, Bhaswanth Ayapilla1, Jaycie Bussell3, Guanya Shi1, Jonathan Francis1,3, Jean Oh1,4,†

Affiliations:

1Carnegie Mellon University

2Delft University of Technology

3Bosch Center for AI

4Lavoro AI Research

*equal contribution, †corresponding author

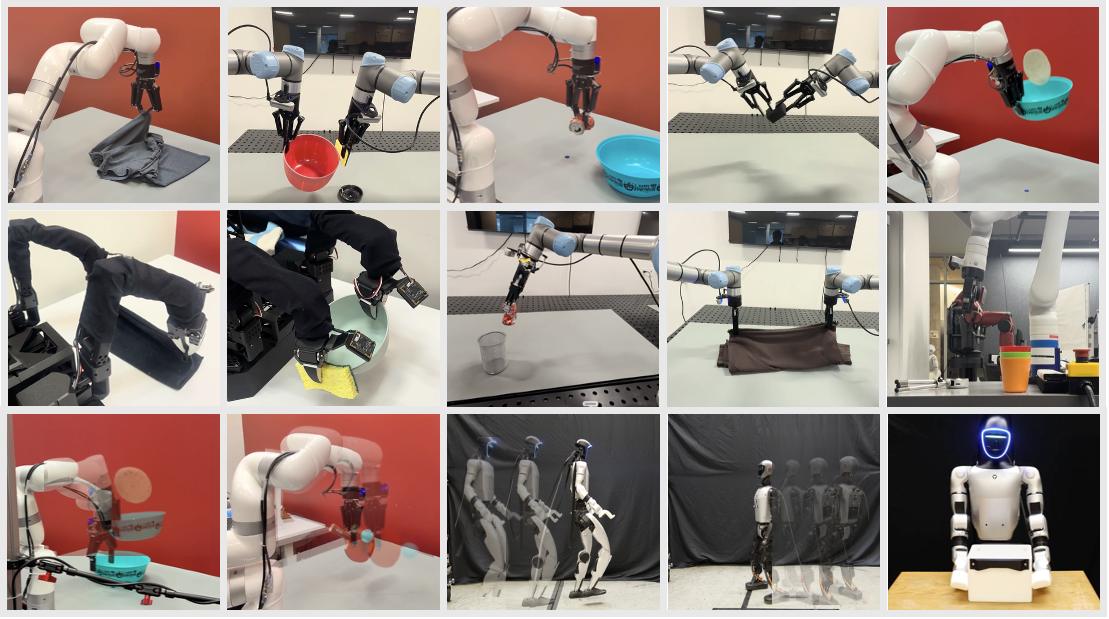

Despite recent efforts to collect multi-task or multi-embodiment datasets, to design efficient recipes for training Vision-Language-Action models (VLAs), and to showcase these models on selected robot platforms, generalist robot capabilities and cross-embodiment transfer remain largely elusive. Cross-embodiment robot learning is still limited by fragmented data-collection infrastructure, the lack of standardized, versatile data formats, and the significant engineering effort required to reproduce hardware setups and maintain multiple control stacks when deploying models on diverse robot platforms. As a result, most robot code is highly specific to the exact robot setup its author chose, which adds major overhead when reusing or sharing artifacts between users. To bridge this gap, we present Robot I/O (RIO), an open-source Python-based framework that provides flexible, lightweight components for robot control, teleoperation, data formatting, sensor configuration, and policy deployment across diverse hardware platforms and morphologies. RIO provides abstractions that let users choose among robots, sensors, teleoperation interfaces, middlewares, data formats, and policies, and switch between them with minimal reconfiguration effort. We validate RIO on VLA deployment workflows across three morphologies (single-arm, bimanual, humanoid) and four robot hardware platforms with varying grippers and cameras. We showcase policy rollouts by collecting teleoperated data to fine-tune state-of-the-art VLAs, including $\pi_{0.5}$ and GR00T, on household tasks such as pick-and-place, folding, and bowl scrubbing. By open-sourcing our work, we hope to help the wider robotics community accelerate robot learning on real-world hardware.
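To make the idea of swappable components concrete, the following is a minimal sketch of how a common robot interface could let the same rollout loop run on different morphologies. It is an illustration only and does not reflect the framework's actual API: the names `Robot`, `SimulatedArm`, `ROBOTS`, `make_robot`, `rollout`, and `nudge_policy` are all hypothetical, and the "robots" are in-memory stand-ins rather than hardware drivers.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Protocol


class Robot(Protocol):
    """Hypothetical minimal robot interface (an assumption, not the real API)."""

    def read_joint_positions(self) -> List[float]: ...

    def command_joint_positions(self, target: List[float]) -> None: ...


@dataclass
class SimulatedArm:
    """In-memory stand-in for a robot with `dof` joints; no hardware involved."""

    dof: int = 7

    def __post_init__(self) -> None:
        # All joints start at zero.
        self._q: List[float] = [0.0] * self.dof

    def read_joint_positions(self) -> List[float]:
        return list(self._q)

    def command_joint_positions(self, target: List[float]) -> None:
        if len(target) != self.dof:
            raise ValueError(f"expected {self.dof} joint targets, got {len(target)}")
        # A real driver would send this to the controller; here we just store it.
        self._q = list(target)


# Hypothetical registry: switching embodiment is a change of key, not of code.
ROBOTS: Dict[str, Callable[[], Robot]] = {
    "single_arm": lambda: SimulatedArm(dof=7),
    "bimanual": lambda: SimulatedArm(dof=14),
}


def make_robot(name: str) -> Robot:
    """Build a robot from its registry name."""
    if name not in ROBOTS:
        raise ValueError(f"unknown robot '{name}', expected one of {sorted(ROBOTS)}")
    return ROBOTS[name]()


Policy = Callable[[List[float]], List[float]]


def rollout(robot: Robot, policy: Policy, steps: int) -> List[float]:
    """Run `steps` observe-act cycles and return the final joint positions."""
    for _ in range(steps):
        observation = robot.read_joint_positions()
        robot.command_joint_positions(policy(observation))
    return robot.read_joint_positions()


def nudge_policy(q: List[float]) -> List[float]:
    """Toy policy: move every joint by 0.1 rad per step."""
    return [x + 0.1 for x in q]


if __name__ == "__main__":
    for name in ("single_arm", "bimanual"):
        final = rollout(make_robot(name), nudge_policy, steps=3)
        print(name, len(final), round(final[0], 3))
```

The design choice illustrated here is structural typing: `rollout` depends only on the two methods in `Robot`, so any class providing them can be used without inheriting from a shared base class. The same pattern would extend to sensors, teleoperation devices, and data writers, each behind its own small interface.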